A walkthrough of a simple Artificial Neural Network (ANN) with training and testing, using numbers

Let’s walk through a simple Artificial Neural Network (ANN) with training and testing, using concrete numbers and focusing on a single data point for clarity.

Network Structure:

- Input Layer: 2 Neurons (x1, x2) – Receives the data point's values.

- Hidden Layer: 2 Neurons (h1, h2) – Applies ReLU activation.

- Output Layer: 1 Neuron (output) – Uses the Softmax function to produce a probability for binary classification.

Data Point:

- x1 = 0.5

- x2 = 1.0

Weight Matrices:

- Input to Hidden Layer (W_hidden):

Forward Pass (Calculation):

- Input Layer: No calculation; the input values are passed directly to the hidden layer.

Input Vector (X):

- x1 = 0.5

- x2 = 1.0

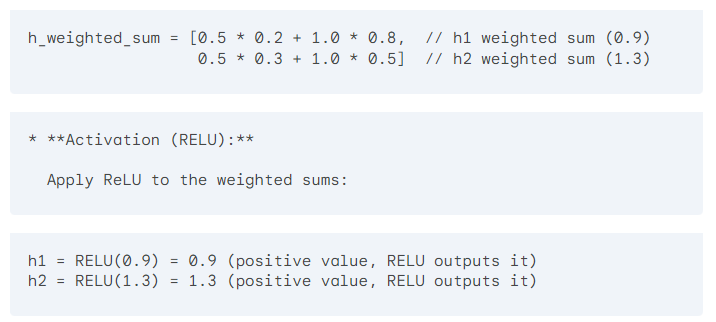

- Hidden Layer:

- Weighted Sum: Compute the weighted sums for both hidden neurons at once with a matrix multiplication, z = X · W_hidden, then pass each sum through ReLU: h1 = max(0, z1) and h2 = max(0, z2).
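The hidden-layer step can be sketched in plain Python as below. This is a minimal illustration, assuming no bias terms (none appear in the walkthrough) and assuming that W_hidden[i][j] holds the weight from input i to hidden neuron j. The numbers in W_HIDDEN are hypothetical placeholders, because the actual weight values are not given in the text, so the results will not match the article's figures.

```python
def relu(z: float) -> float:
    """ReLU activation: returns z if it is positive, otherwise 0."""
    return max(0.0, z)


def hidden_layer(x: list[float], w_hidden: list[list[float]]) -> list[float]:
    """Return [h1, h2] = ReLU(x · W_hidden), with no bias term.

    Assumes w_hidden[i][j] is the weight from input i to hidden neuron j.
    """
    n_hidden = len(w_hidden[0])
    # Weighted sum for each hidden neuron j: sum over inputs i of x[i] * w_hidden[i][j]
    z = [sum(x[i] * w_hidden[i][j] for i in range(len(x))) for j in range(n_hidden)]
    # Apply ReLU to each weighted sum
    return [relu(zj) for zj in z]


X = [0.5, 1.0]  # x1, x2 from the walkthrough
# HYPOTHETICAL weights: placeholders, not the article's actual W_hidden values
W_HIDDEN = [[0.2, -0.8],
            [0.6, 0.3]]

h1, h2 = hidden_layer(X, W_HIDDEN)
print(h1, h2)  # h2 is 0.0 because its weighted sum (-0.1) is negative
```

With these placeholder weights, h1's weighted sum is about 0.7 and passes through ReLU unchanged, while h2's is negative and is clipped to 0.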

- Output Layer:

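- Weighted Sum: Multiply each hidden activation (h1, h2) by its hidden-to-output weight and add the products to get output_weighted_sum, which is 2.2 in this example.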
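The output neuron's weighted sum is a dot product of the hidden activations with the hidden-to-output weights. A minimal sketch, again assuming no bias term; H and W_OUTPUT below are hypothetical placeholder values (the article lists neither), so the result differs from the article's 2.2.

```python
def output_weighted_sum(h: list[float], w_output: list[float]) -> float:
    """Dot product of hidden activations [h1, h2] with hidden-to-output weights (no bias)."""
    if len(h) != len(w_output):
        raise ValueError("need exactly one weight per hidden neuron")
    return sum(hj * wj for hj, wj in zip(h, w_output))


# HYPOTHETICAL values: placeholders, not taken from the article
H = [0.7, 0.0]         # hidden activations h1, h2 after ReLU
W_OUTPUT = [1.5, 0.9]  # weights from h1 and h2 to the output neuron

# h2 = 0.0, so its weight contributes nothing to the sum
print(output_weighted_sum(H, W_OUTPUT))
```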

Softmax Function (Detailed Explanation):

The Softmax function takes the output_weighted_sum (2.2 in this case) and transforms the network's outputs into a probability distribution: every value lies between 0 and 1, and the values sum to 1.

Here’s the breakdown:

- Calculate Exponentials:

- exp(output_weighted_sum) = exp(2.2) ≈ 9.025

- Calculate Sum of Exponentials:

- Since there’s only one element, the sum is that value itself (9.025).

- Normalize by Sum:

- output = exp(output_weighted_sum) / sum(exp(output_weighted_sum))

- output ≈ 9.025 / 9.025 = 1.0 (the probability for Class 1; with only one output, this ratio is always exactly 1.0)
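The three Softmax steps above translate directly into code. This sketch uses only Python's standard math module. Subtracting the largest score before exponentiating is a common numerical-stability refinement that the walkthrough does not use; it cancels out in the division and leaves the probabilities unchanged. Passing the single weighted sum 2.2 reproduces the walkthrough's result.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that lie in [0, 1] and sum to 1."""
    m = max(scores)  # shift by the max so math.exp cannot overflow; ratios are unchanged
    exps = [math.exp(s - m) for s in scores]  # step 1: exponentials (of the shifted scores)
    total = sum(exps)                          # step 2: sum of exponentials
    return [e / total for e in exps]           # step 3: normalize by the sum


print(math.exp(2.2))          # ≈ 9.025
print(softmax([2.2]))         # [1.0]: a single score always maps to probability 1.0
print(softmax([2.2, 1.0]))    # ≈ [0.769, 0.231]: with two scores the result depends on them
```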

Complete Output:

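Based on the calculations, the network outputs 1.0 from the Softmax function. Because Softmax divides each exponential by the sum of the exponentials of all outputs, a single output neuron always receives a probability of exactly 1.0, regardless of its weighted sum or the training data, so this value cannot distinguish between the two classes. For binary classification with one output neuron, the sigmoid function, 1 / (1 + exp(-z)), is the usual choice: here it gives 1 / (1 + exp(-2.2)) ≈ 0.90, a high probability for the positive class. Alternatively, the output layer can have two neurons (one per class) with Softmax applied across both. Either way, the resulting probability represents the likelihood of the data point belonging to that class after combining the weighted influences of the hidden layer neurons (h1 and h2).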
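Because a one-element Softmax always returns 1.0, binary classifiers usually either pass a single output through the sigmoid function or give the output layer two neurons and apply Softmax across them. The plain-Python sketch below compares both on the walkthrough's weighted sum of 2.2. The two-neuron version assumes, for illustration only, a hypothetical weighted sum of 0.0 for the other class; in that case the two approaches give the same probability.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores: list[float]) -> list[float]:
    """Probabilities in [0, 1] that sum to 1 (max-shifted for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


z = 2.2  # output_weighted_sum from the walkthrough

p_sigmoid = sigmoid(z)       # ≈ 0.900 for the positive class
p_two = softmax([0.0, z])    # HYPOTHETICAL 0.0 score for the other class
print(p_sigmoid)
print(p_two)                 # ≈ [0.100, 0.900]; the second entry equals sigmoid(z)
print(softmax([z]))          # [1.0] regardless of z
```

The match is not a coincidence: exp(z) / (exp(0) + exp(z)) simplifies to 1 / (1 + exp(-z)), so a single sigmoid output is equivalent to a two-class Softmax whose other score is fixed at 0.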