Convolutional Layers in AI Model Training: A Deep Dive into 3×3 Filters

A convolutional layer is a fundamental building block of Convolutional Neural Networks (CNNs), which are used mainly for image tasks such as image classification, object detection, and image segmentation. The layer applies convolution operations to its input, which lets the network learn spatial hierarchies of features directly from the input images.

Key Concepts:

- Filters/Kernels:

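- A filter, also known as a kernel, is a small matrix of learnable weights that slides over the input. At each position it computes the sum of the element-wise products between its weights and the input patch beneath it.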

- Common filter sizes are 3×3 and 5×5.

- Each filter detects a specific feature, such as edges, corners, or textures.

- Stride:

- Stride is the number of pixels the filter moves between successive positions on the input.

- A stride of 1 moves the filter one pixel at a time; a stride of 2 moves it two pixels at a time, which roughly halves the width and height of the output.

- Padding:

- Padding involves adding extra pixels around the border of the input image.

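- Without padding, the filter cannot be centered on border pixels, so the output is smaller than the input and edge pixels contribute to fewer output values.

- Common padding types are ‘valid’ (no padding, so the output shrinks) and ‘same’ (enough padding to keep the output the same size as the input when the stride is 1).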

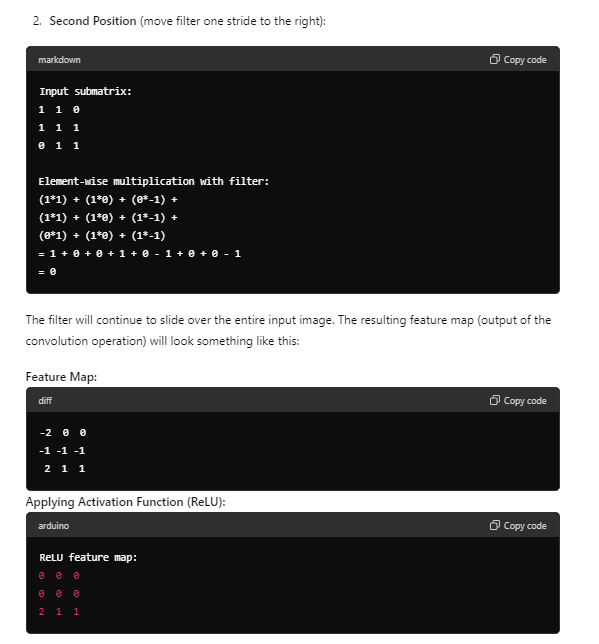
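
The combined effect of filter size, stride, and padding on the output size can be sketched with the usual formula, floor((n + 2p - k) / s) + 1 along each axis. The helper name `conv_output_size` and its parameter names are illustrative and do not come from any particular library.

```python
def conv_output_size(n, k, stride=1, padding=0):
    """Output length along one axis.

    n: input size, k: filter size, stride: step between positions,
    padding: number of pixels added on each side of the input.
    """
    return (n + 2 * padding - k) // stride + 1


# 5x5 input, 3x3 filter
print(conv_output_size(5, 3))             # 'valid' padding, stride 1 -> 3
print(conv_output_size(5, 3, padding=1))  # 'same' padding, stride 1  -> 5
print(conv_output_size(5, 3, stride=2))   # 'valid' padding, stride 2 -> 2
```

For a 3×3 filter at stride 1, one pixel of padding on each side is what ‘same’ padding amounts to.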

- Activation Function:

- After the convolution operation, an activation function like ReLU (Rectified Linear Unit) is applied to introduce non-linearity into the model.

- ReLU replaces every negative value in the feature map with zero. It is cheap to compute, and because its gradient is 1 for positive inputs, it helps mitigate the vanishing gradient problem that affects saturating activations such as sigmoid and tanh.

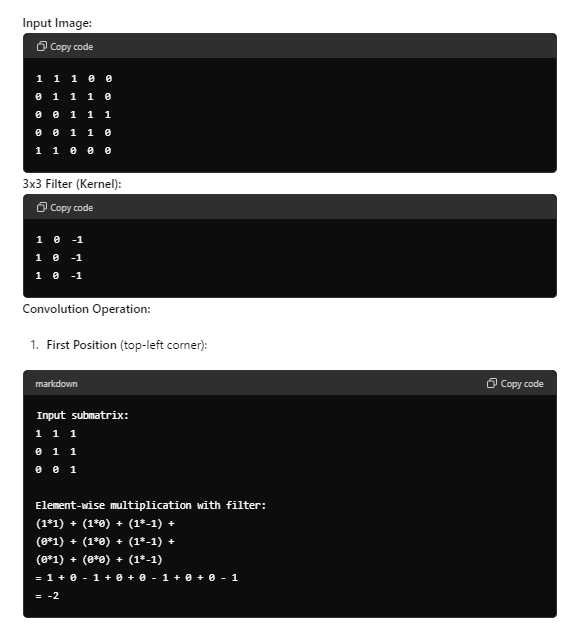
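
As a minimal sketch, ReLU and its gradient can be written in plain Python over a feature map stored as a list of rows. The function names `relu` and `relu_grad` and the sample values are illustrative assumptions.

```python
def relu(feature_map):
    """Apply ReLU element-wise: negative values become zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def relu_grad(feature_map):
    """Gradient of ReLU: 1 where the input is positive, 0 elsewhere."""
    return [[1.0 if v > 0 else 0.0 for v in row] for row in feature_map]


feature_map = [[-2.0, 1.5], [0.0, -0.5]]  # hypothetical values
print(relu(feature_map))       # [[0.0, 1.5], [0.0, 0.0]]
print(relu_grad(feature_map))  # [[0.0, 1.0], [0.0, 0.0]]
```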

Example: Convolution Operation with a 3×3 Filter

Let’s walk through an example of how a convolution operation works with a 3×3 filter on a 5×5 input image.
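
One way to sketch such a walk-through is shown here in plain Python, with no libraries so that each step stays visible. The input values and the vertical-edge filter are made up for illustration, the helper `conv2d` is hypothetical, and it assumes a square input, a square filter, and no padding. As in most deep learning frameworks, the filter is applied without flipping (strictly speaking, a cross-correlation).

```python
def conv2d(image, kernel, stride=1):
    """'Valid' 2D convolution of a square image with a square kernel."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            top, left = i * stride, j * stride
            # Sum of element-wise products between the kernel
            # and the k x k patch whose top-left corner is (top, left).
            total = sum(
                image[top + a][left + b] * kernel[a][b]
                for a in range(k)
                for b in range(k)
            )
            row.append(total)
        output.append(row)
    return output


# Hypothetical 5x5 input: bright on the left, dark on the right.
image = [[1, 1, 1, 0, 0] for _ in range(5)]

# Illustrative 3x3 filter that responds to vertical edges.
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
# [0, 3, 3]
# [0, 3, 3]
# [0, 3, 3]
```

The 3×3 output is zero where the patch is uniform and 3 where the patch straddles the bright-to-dark boundary, which is the sense in which a filter "detects" a feature.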

Training the Model:

- Forward Pass:

- Convolution layers extract features from input images by applying filters and activation functions.

- These features are passed through subsequent layers (e.g., more convolutional layers, pooling layers, fully connected layers) to capture higher-level patterns.

- Loss Calculation:

- The final layer produces predictions (e.g., probabilities of different classes).

- A loss function (e.g., cross-entropy loss) compares the predictions with the actual labels to calculate the error.

- Backward Pass:

- Compute Loss: Calculate the loss using a loss function (e.g., cross-entropy loss for classification tasks).

- Calculate Gradients:

- Gradients of the loss with respect to the output of the network are computed first.

- These gradients are then propagated backward through the network, layer by layer.

- Update Parameters:

- For each filter in the convolutional layers, calculate the gradient of the loss with respect to the filter weights.

- Use these gradients to update the filter weights using an optimization algorithm (e.g., stochastic gradient descent or Adam).
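
The forward pass, loss, gradient, and update steps above can be sketched for a single 3×3 filter in plain Python. This is a simplified illustration under stated assumptions, not a full training loop: it uses a squared-error loss instead of cross-entropy to keep the gradient short, plain gradient descent instead of Adam, and made-up input, target, and learning-rate values. All function names are hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' convolution, stride 1, square image and kernel."""
    k = len(kernel)
    n = len(image) - k + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(n)] for i in range(n)]


def squared_error(output, target):
    """Assumed loss: 0.5 * sum of squared differences."""
    return 0.5 * sum((o - t) ** 2
                     for out_row, tgt_row in zip(output, target)
                     for o, t in zip(out_row, tgt_row))


def filter_gradient(image, grad_output, k):
    """dL/dW[a][b] = sum over (i, j) of grad_output[i][j] * image[i+a][j+b]."""
    n = len(grad_output)
    return [[sum(grad_output[i][j] * image[i + a][j + b]
                 for i in range(n) for j in range(n))
             for b in range(k)] for a in range(k)]


# Hypothetical data: 5x5 input and the 3x3 response we want the filter to produce.
image = [[1.0, 1.0, 1.0, 0.0, 0.0] for _ in range(5)]
target = [[0.0, 3.0, 3.0] for _ in range(3)]
kernel = [[0.0] * 3 for _ in range(3)]  # filter weights start at zero
learning_rate = 0.01                    # illustrative value

# Forward pass and loss
output = conv2d(image, kernel)
loss_before = squared_error(output, target)

# Backward pass: gradient w.r.t. the output, then w.r.t. the filter weights
grad_output = [[o - t for o, t in zip(out_row, tgt_row)]
               for out_row, tgt_row in zip(output, target)]
grad_kernel = filter_gradient(image, grad_output, 3)

# Parameter update: one step of gradient descent
kernel = [[w - learning_rate * g for w, g in zip(w_row, g_row)]
          for w_row, g_row in zip(kernel, grad_kernel)]

loss_after = squared_error(conv2d(image, kernel), target)
print(loss_before, loss_after)  # the loss should drop after the update
```

Repeating the forward pass, gradient computation, and update over many examples is what gradually shapes the filter weights.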

Summary

- The convolutional layer is crucial in extracting spatial features from input images.

- It uses filters/kernels to perform convolution operations, detecting patterns like edges.

- Activation functions like ReLU introduce non-linearity.

- During training, the model adjusts filter weights to minimize prediction errors, making the network better at recognizing patterns in images.