Understanding Softmax Activation: Converting Logits to Probabilities with a Numerical Example

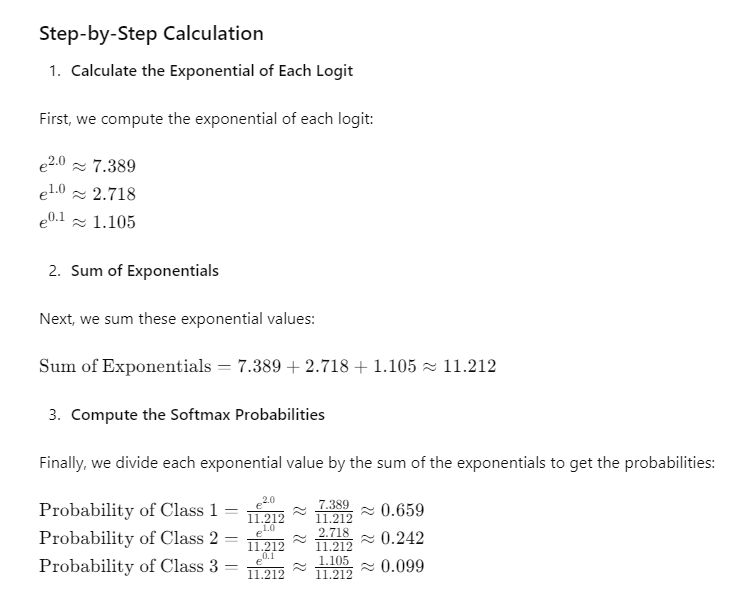

Let’s go through a numerical example to see how the softmax function converts the raw scores (logits) from the second-to-last layer into probabilities.

Example

Assume the second-to-last layer of a neural network outputs the following values for a particular input image:

$$\text{Logits} = [2.0, 1.0, 0.1]$$

These values are the raw, unnormalized scores for three classes before softmax is applied. We'll convert them into probabilities using the softmax function.
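For reference, softmax maps each logit to its exponential divided by the sum of the exponentials of all logits. In the notation below, $z_i$ is the $i$-th logit and $K$ is the number of classes (symbols introduced here for illustration). Working through the three logits above, with intermediate values rounded to three decimals:

$$
\text{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}
$$

$$
e^{2.0} \approx 7.389, \quad e^{1.0} \approx 2.718, \quad e^{0.1} \approx 1.105, \quad 7.389 + 2.718 + 1.105 \approx 11.213
$$

$$
\frac{7.389}{11.213} \approx 0.659, \quad \frac{2.718}{11.213} \approx 0.242, \quad \frac{1.105}{11.213} \approx 0.099
$$

The three results sum to $0.659 + 0.242 + 0.099 = 1.000$, as softmax outputs always do.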

$$\text{Probabilities} \approx [0.659, 0.242, 0.099]$$

This means that, according to the neural network, the input image has approximately:

- 65.9% chance of belonging to Class 1,

- 24.2% chance of belonging to Class 2, and

- 9.9% chance of belonging to Class 3.

Here is a simple Java example that demonstrates the softmax calculation:

```java
import java.util.Arrays;

public class SoftmaxExample {

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.1};
        double[] probabilities = softmax(logits);

        System.out.println("Logits: " + Arrays.toString(logits));
        System.out.println("Probabilities: " + Arrays.toString(probabilities));
    }

    public static double[] softmax(double[] logits) {
        double[] expValues = new double[logits.length];
        double sumExp = 0.0;

        // Compute exponentials and their sum
        for (int i = 0; i < logits.length; i++) {
            expValues[i] = Math.exp(logits[i]);
            sumExp += expValues[i];
        }

        // Compute softmax probabilities
        for (int i = 0; i < logits.length; i++) {
            expValues[i] /= sumExp;
        }

        return expValues;
    }
}
```
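The softmax method above calls `Math.exp` on the raw logits, so a large logit such as 1000.0 overflows to `Infinity` and the division then produces `NaN`. A common remedy, sketched here as an assumption rather than as the author's code, is to subtract the largest logit before exponentiating. Subtracting the same constant from every logit leaves softmax unchanged in exact arithmetic, and the largest shifted logit becomes `exp(0) = 1`, so nothing overflows. The class `StableSoftmax` and method `stableSoftmax` are hypothetical names.

```java
import java.util.Arrays;

public class StableSoftmax {

    // Hypothetical helper: softmax with max-subtraction for numerical stability.
    public static double[] stableSoftmax(double[] logits) {
        if (logits.length == 0) {
            throw new IllegalArgumentException("logits must not be empty");
        }

        // Find the largest logit.
        double max = Double.NEGATIVE_INFINITY;
        for (double z : logits) {
            max = Math.max(max, z);
        }

        // Exponentiate the shifted logits; every exponent is <= 0, so no overflow.
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = Math.exp(logits[i] - max);
            sum += probs[i];
        }

        // Normalize so the outputs sum to 1.
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    public static void main(String[] args) {
        double[] small = {2.0, 1.0, 0.1};
        // Same gaps between logits as above, shifted up by 998.
        double[] large = {1000.0, 999.0, 998.1};

        System.out.println(Arrays.toString(stableSoftmax(small)));
        System.out.println(Arrays.toString(stableSoftmax(large)));
    }
}
```

Because the second set of logits is the first shifted by a constant, both lines should print essentially the same probabilities (up to floating-point rounding). The unshifted `softmax` from the article would return `NaN` for every entry of the second input.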